As part of an SEO service package, technical SEO deals with a website’s technical components, such as sitemaps, page speed, URL structure, schema markup, navigation, and more.

The bottom line: Every online business needs a fully optimized website to attract qualified leads. Technical SEO makes your website fast, mobile-friendly, user-friendly, and reliable.

In this blog post, we explain the basics of technical SEO so you can optimize your website and, more importantly, talk to your marketing team about this key facet of search engine optimization.

Technical SEO and Search Engines: How It Works

Search engines are software programs that help people find the information they’re looking for online by searching for keywords or phrases.

Google says its mission is “to organize the world’s information and make it universally accessible and useful. That’s why Search makes it easy to discover a broad range of information from a wide variety of sources.”

Its proprietary algorithms retrieve the most relevant and reputable sources of information possible to present to internet users.

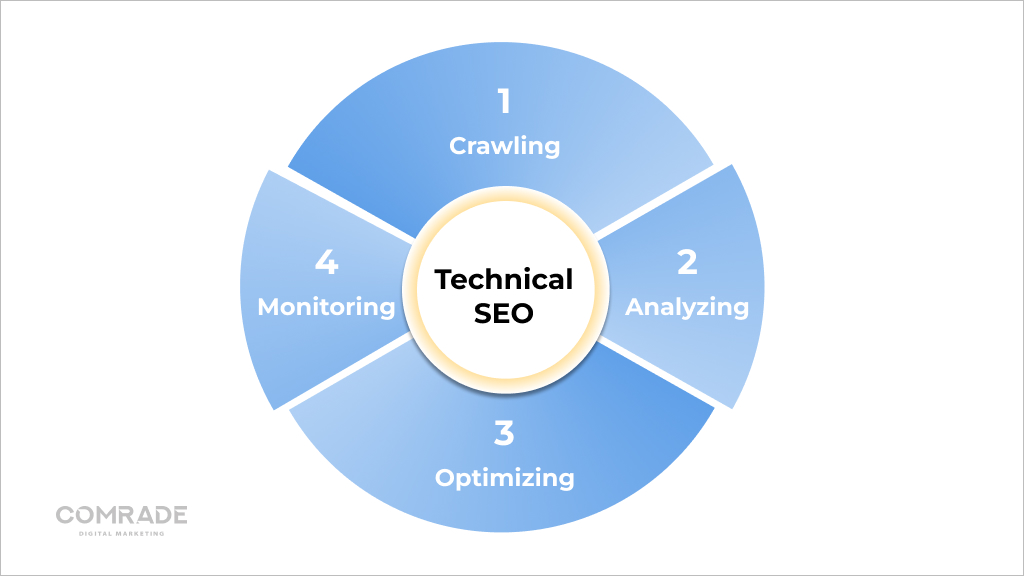

Technically, the process works like this:

Crawling

Sophisticated programs called web crawlers, also referred to as “robots” or “spiders,” scour the internet for content and URLs. Before crawling a website, they typically download its robots.txt file, which contains rules about which URLs a search engine may crawl.

Because there isn’t a centralized registry of all existing web pages, search engines like Google constantly look for new and updated pages to add to their list of known pages. This is called “URL discovery.”

More than 547,200 new websites are created globally every day, hence the importance of Googlebots constantly crawling the web!

Spiders also crawl pages known to search engines to determine if any changes have been made. If so, they will update the index in response to these changes. For instance, when an eCommerce store updates its product pages, it might rank for new keywords.

Web crawlers calculate how frequently a web page should be re-crawled and how many pages on a site to crawl based on the site’s crawl budget, which is determined by the crawl rate limit and crawl demand.

Due to limited resources, Googlebots have to prioritize their crawling effort, and assigning a crawl budget to each website helps them do this. If a website’s technical SEO is up to scratch, the site is faster to crawl, which raises its crawl rate limit (roughly, how much a bot can crawl within a certain timeframe without overloading the server).

Conversely, if a site slows down or returns server errors because of poor technical SEO, Googlebots crawl less to avoid overloading the server. Crawling a slow, error-prone website also makes little sense from Google’s perspective, because such a site won’t provide a positive user experience, which goes against Google’s key objectives.

However, Google has published the following about the crawl budget:

“Crawl budget…is not something most publishers have to worry about…new pages tend to be crawled the same day they’re published on. Crawl budget is not something webmasters need to focus on.”

Crawl budget can affect newer pages and pages that aren’t well linked or regularly updated. However, in most cases, a regularly updated website with fewer than 1,000 URLs will be crawled efficiently.

Rendering

Rendering is the process of turning a page’s code into the visual page a visitor sees. In layman’s terms, it’s the process of “painting text and images on screen” so search engines can understand a web page’s layout and what the page is about.

Search engines process a website’s HTML code (along with its CSS and JavaScript) and translate it into text blocks, images, videos, and other elements. Rendering helps search engines understand the user experience and is a necessary step before indexing and ranking.

Indexing

After a page is rendered, Google tries to understand what it is about. This is called indexing. During this process, Google analyzes the textual content (information) of web pages, including key content tags, keywords, alt attributes, images, videos, etc.

It also analyzes the relationships between pages and websites by following internal links, external links, and backlinks. Therefore, the better a job you do at making your website easy to understand (by investing in technical SEO), the higher the chances of Google indexing its pages.

Links are like the neural pathways of the internet, connecting web pages. Robots need them to understand how web pages relate to each other and to contextualize their content. However, search bots don’t necessarily follow every link. Ordinary “dofollow” links (links without a rel="nofollow" attribute) pass ranking signals, while links marked rel="nofollow" ask search engines not to; Google treats nofollow as a hint rather than a strict rule.
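To see which links on a page carry a nofollow-style hint, a crawler-style script can read each `<a>` tag’s `href` and `rel` attributes. The sketch below uses Python’s standard-library `html.parser`; the HTML snippet and URLs are hypothetical, and it also treats the `sponsored` and `ugc` rel values as hints, which is a simplification of how Google actually weighs them.

```python
from html.parser import HTMLParser

# rel values that ask search engines not to pass ranking credit through a link
HINT_VALUES = {"nofollow", "sponsored", "ugc"}

class LinkAuditor(HTMLParser):
    """Collects (href, followed) pairs for every <a href> on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, bool]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # rel can hold several space-separated values, e.g. "nofollow noopener"
        rel_values = set((attributes.get("rel") or "").lower().split())
        followed = not (rel_values & HINT_VALUES)
        self.links.append((href, followed))

HTML = """
<a href="/bedroom/side-tables">Side tables</a>
<a href="https://partner.example.com/" rel="nofollow noopener">Partner</a>
<a href="https://ads.example.com/" rel="sponsored">Ad</a>
"""

auditor = LinkAuditor()
auditor.feed(HTML)
for href, followed in auditor.links:
    print(f"{href}: {'followed' if followed else 'nofollow-style hint'}")
```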

Link-building matters for the same reason: when external links come from high-quality domains, they strengthen the website they link to, which can boost its SERP rankings. When crawlers find a web page, they render its content and record everything, from keywords to website freshness, in Google’s Search index.

This index is essentially a digital library of hundreds of billions of web pages. So, interestingly, when you type a query into a search engine, you’re not directly searching the web; you’re searching the search engine’s index of web pages.
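A search index works much like the index at the back of a book: it maps words to the pages that contain them, so a query is answered by looking words up rather than scanning the web. The toy inverted index below illustrates that idea only; real search indexes add ranking, stemming, synonyms, and much more, and the pages here are hypothetical.

```python
from collections import defaultdict

def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map each lowercase word to the set of page URLs that contain it."""
    index: dict[str, set[str]] = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index

def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the pages containing every query word (a simple AND search)."""
    words = query.lower().split()
    if not words:
        return set()
    results = set(index.get(words[0], set()))  # copy so the index isn't modified
    for word in words[1:]:
        results &= index.get(word, set())
    return results

pages = {
    "https://example.com/side-tables": "oak side tables for the bedroom",
    "https://example.com/lamps": "bedroom lamps and reading lights",
    "https://example.com/desks": "oak desks for the home office",
}
index = build_index(pages)
print(sorted(search(index, "oak bedroom")))
```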

Ranking

When a user types in a query, Google has to determine which pages in its index best match what that user is looking for. Google is widely reported to use more than 200 ranking factors, which can be summarized into five major categories:

- Query meaning: Google’s algorithm analyzes the intent of a user’s question using complex language tools built on past searches and usage behavior.

- Web page relevance: The most basic relevance signal is whether a page contains the keywords in the query. Keywords on a website should match Google’s understanding of the query a user asks.

- Content quality: Once Google matches keywords from numerous web pages, it reviews the content quality, website authority, individual PageRank, and freshness to prioritize where pages rank.

- Web page usability: Pages that are easy to use receive priority. Site speed, responsiveness, and mobile-friendliness play a part.

- Additional context and settings: Lastly, Google’s algorithm will factor in past user engagement and specific settings within the search engine. Some signals include the language of the page and the country the content is aimed at.

If you want more traffic and to rank higher on Google and other search engines, you have to pay attention to technical SEO.

How Technical SEO Improves Website Crawling and Indexing

At the most elementary level, search engines need to be able to find, crawl, render, and index your website’s pages. Therefore, improving these technical aspects (technical SEO) should help your pages rank higher in search results.

How to Improve Website Crawling

Crawl efficiency refers to how easily bots can crawl all the pages on your website. A clean URL structure, reliable servers, accurate sitemaps, and a well-configured robots.txt file all improve crawl efficiency.

1. Set up Robots.txt

A robots.txt file contains directives for search engines and is chiefly used to tell crawlers which URLs they may and may not crawl.

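Blocking pages from being crawled may seem contrary to SEO objectives, but it’s useful when, for example, you’re building a new website and don’t want unfinished pages crawled. (If you have multiple versions of the same page, Google recommends canonical tags rather than robots.txt, because it needs to crawl duplicate pages to recognize them as duplicates.)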

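You can also use a robots.txt file to keep crawlers from overloading your server with too many requests at once and to keep them away from private pages. Note that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it, so use a noindex tag for pages that must stay out of the index.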

If your website is large and you’re having difficulty getting all your pages indexed, a robots.txt file can help bots spend their time on the pages that matter.
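Before deploying robots.txt rules, it’s worth checking that they block what you intend and nothing more. A minimal sketch using Python’s standard-library `urllib.robotparser`, assuming a hypothetical store on www.example.com that wants crawlers to skip its admin and cart pages. Note that Python’s parser only does simple prefix matching, so it won’t reproduce every detail of Google’s own rule matching (such as `*` wildcards inside paths).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block admin and cart pages, point crawlers to the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://www.example.com/sitemap.xml
"""

def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

parser = build_parser(ROBOTS_TXT)

for path in ["/", "/bedroom/side-tables", "/admin/login", "/cart/"]:
    url = "https://www.example.com" + path
    verdict = "crawl" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{path} -> {verdict}")

print(parser.site_maps())  # sitemap URLs declared in the file
```

The same check can be pointed at a live file with `set_url(...)` and `read()`, which fetch robots.txt over the network.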

2. Choose Good Hosting

Hosting affects website speed, server downtime, and security, all of which factor into technical SEO performance. A good web host is reliable and available, and it provides efficient customer support.

We know website hosting can be expensive, but the truth is you get what you pay for. We’ve seen clients make common mistakes, like opting for free hosting that comes with an influx of pop-up ads that slow page loading, or choosing a cheaper option that compromises data security.

Trusted web hosts typically guarantee at least 99.9% uptime, which requires powerful servers and a resilient infrastructure. A proper hosting solution ensures the security, accessibility, and reliability of your online services.
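An uptime percentage is easier to judge once it’s converted into actual downtime. The arithmetic below assumes a 365-day year and a 30-day month; by that measure, a 99.9% guarantee still allows roughly 8.8 hours of downtime a year, or about 43 minutes a month.

```python
def allowed_downtime_minutes(uptime_percent: float, period_days: float) -> float:
    """Minutes of downtime an uptime guarantee permits over a period of days."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)

for uptime in (99.0, 99.9, 99.99):
    per_year_hours = allowed_downtime_minutes(uptime, 365) / 60
    per_month_minutes = allowed_downtime_minutes(uptime, 30)
    print(f"{uptime}% uptime: {per_year_hours:.1f} h/year, {per_month_minutes:.1f} min/month")
```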


3. Delete Useless and Redundant Pages

Search Engine Land recently published an article on the looming impossibility of Google indexing every page of every website.

This isn’t necessarily all doom and gloom: low-quality web pages don’t belong in search results anyway, because they provide no value to visitors and waste a search bot’s precious time (crawl budget).

Despite this, we often come across index bloat (too many low-value pages in the index), particularly on eCommerce websites with large product inventories and customer reviews.

When search engine spiders index these undesirable pages, they erode your site’s relevance signals, which may result in Google overlooking more important pages.

Therefore, conducting regular page audits and keeping a website clean ensures bots only index important URLs.

4. Avoid Duplicate Pages

Duplicate pages dilute link equity and confuse search engines because they don’t know which version to rank, so you typically end up competing against yourself in SERPs. If you have duplicate pages on your website, both are likely to perform poorly.

Duplicate content occurs both on-site and off-site. Off-site duplicate content is difficult to control. To get rid of off-site duplicate content (usually content marketing material like blog posts or product pages), you can submit a copyright infringement report to Google requesting that it remove the duplicate page from its index.

In most cases, on-site duplicate content isn’t a malicious attempt at manipulating search engine rankings but the result of poor site architecture and website development (see the indexing section below for more detail).

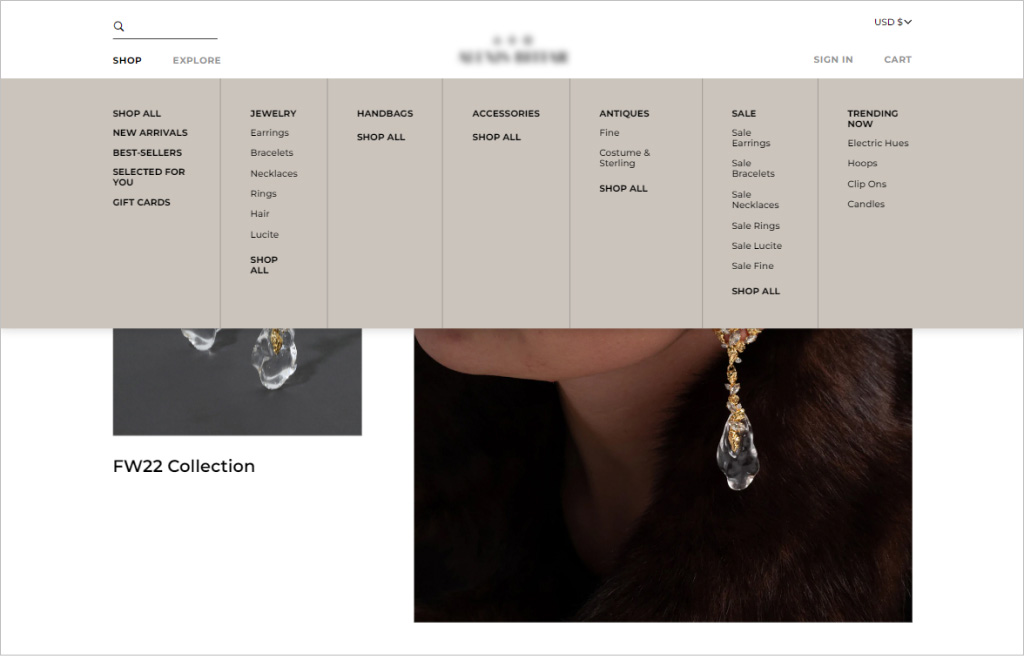

5. Improve Website Navigation

When we speak about website navigation, we’re referring to the link structure and hierarchy of a website. Good website navigation gets users where they need to be in as few clicks as possible.

As a general rule of thumb, your site structure should let visitors land on any page and find what they need within three clicks. Beyond a consistent design aesthetic, the most effective navigation menus have a clear hierarchical structure, with clickable subcategories included in the menu if necessary.
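One way to check the three-click rule is to treat your site as a graph of internal links and run a breadth-first search from the homepage, which finds the fewest clicks needed to reach each page. The link map below is hypothetical; in practice you would build it from a site crawl.

```python
from collections import deque

def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage: fewest clicks needed to reach each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

site = {
    "/": ["/shop", "/about"],
    "/shop": ["/shop/bedroom"],
    "/shop/bedroom": ["/shop/bedroom/side-tables"],
    "/shop/bedroom/side-tables": ["/shop/bedroom/side-tables/oak-side-table"],
}
depths = click_depths(site)
too_deep = [page for page, depth in depths.items() if depth > 3]
print(too_deep)
```

Pages that show up in `too_deep` are candidates for links from higher-level category pages or the main menu. Pages missing from `depths` altogether can’t be reached from the homepage at all.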

Order is important when it comes to navigation. Users are more likely to remember the first and last menu items, which is why many eCommerce navigation menus start with a shop page and end with a call to action like a checkout button.

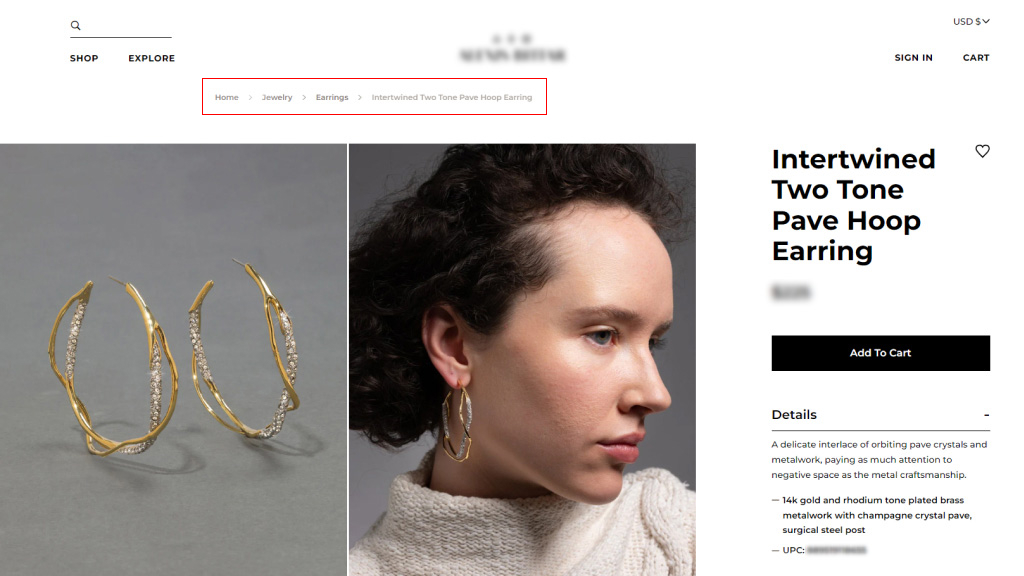

6. Use Breadcrumbs

Breadcrumbs are secondary navigation systems that show a user’s location on a website. They streamline navigation for complex eCommerce websites with many categories and product variation pages and are especially useful when users arrive at a site through an external link that doesn’t lead to the homepage.

Breadcrumbs supplement main navigation menus but can’t replace them. There are two common types of breadcrumbs:

- Location-based, which helps users identify their current location in the site structure; and

- Attribute-based, which helps users identify important qualities of the page being viewed.

Using breadcrumbs encourages people to visit more pages and reduces bounce rate, which is good for SEO overall. However, you only need them if your site structure is complex and you want to make it easier for users to move between category pages.
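Location-based breadcrumbs can also be described to search engines with schema.org `BreadcrumbList` structured data, which Google can use to show the trail in search results. A minimal sketch that generates the JSON-LD, assuming the hypothetical home decor store lovemyhome.com; validate real markup with Google’s Rich Results Test.

```python
import json

def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> str:
    """Build schema.org BreadcrumbList JSON-LD from (name, url) pairs, top level first."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            # positions start at 1 for the top-level page
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }
    return json.dumps(data, indent=2)

trail = [
    ("Home", "https://lovemyhome.com/"),
    ("Bedroom", "https://lovemyhome.com/bedroom"),
    ("Side Tables", "https://lovemyhome.com/bedroom/side-tables"),
]
print(f'<script type="application/ld+json">\n{breadcrumb_jsonld(trail)}\n</script>')
```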

7. Provide a Sitemap.xml

A sitemap is a file that lists all the web pages of your website you want search engines to know about and consider ranking. Sitemaps aren’t required, but they’re highly encouraged because they help search engines crawl your website more efficiently.

Sitemaps are especially useful for new websites with few backlinks that need to be indexed, for large sites where crawlers might overlook new or recently updated pages, and for archival sites where pages don’t naturally link to each other.

Having a sitemap gives search engines a useful guide to what your website contains and how its pages are linked, which can support your SEO, rankings, and traffic.
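A sitemap.xml file follows the Sitemaps XML protocol: a `<urlset>` element in the protocol’s namespace containing one `<url>` entry per page, each with a `<loc>` and, optionally, a `<lastmod>` date. A minimal generator using Python’s standard-library ElementTree; the URLs and dates are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Return sitemap XML for (URL, last-modified date as YYYY-MM-DD) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, <, > for us
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

sitemap = build_sitemap([
    ("https://lovemyhome.com/", "2024-01-15"),
    ("https://lovemyhome.com/bedroom", "2024-01-10"),
    ("https://lovemyhome.com/bedroom/side-tables", "2024-01-12"),
])
print(sitemap)
```

The output would typically be saved as sitemap.xml at the site root and submitted in Google Search Console.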

How to Check and Fix Your Website’s Crawl Issues

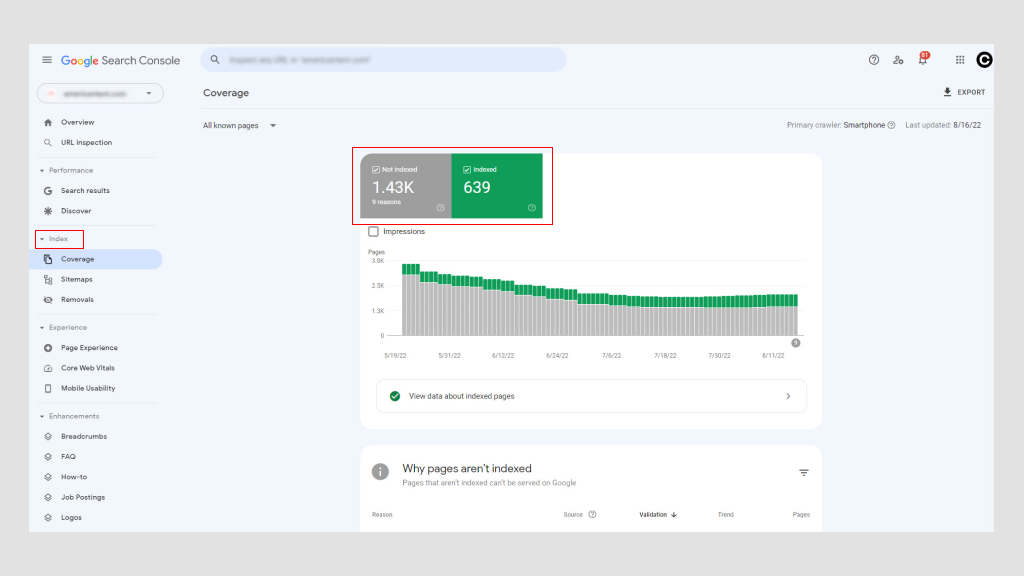

Google Search Console is the best way to determine whether your website has any crawl issues. Other tools exist, but Search Console’s major benefit is that it’s free. Older versions of Search Console had a “Crawl Errors” report on the main dashboard; the current version surfaces these problems in its Page indexing and Crawl stats reports.

Google has historically divided these errors into two categories: site errors and URL errors. Site-level issues are more worrisome because they can damage your entire website’s usability, whereas URL errors are specific to individual pages and less problematic, although they should still be fixed.

1. Possible Site Errors

- DNS errors: Googlebot can’t connect to your domain name because of a DNS timeout or lookup failure. To fix this, check your DNS settings with your domain registrar or DNS provider; the cause is usually misconfigured DNS records or an unresponsive DNS server.

- Server connectivity errors: Usually, the site takes too long to respond and the request times out. This means Googlebot reached your server but couldn’t load the page, often because the server is overloaded, misconfigured, or blocking Googlebot (for example, through firewall rules).

- Robots.txt fetch errors: Googlebot can’t retrieve your robots.txt file. Make sure the file is reachable at the root of your domain (for example, https://example.com/robots.txt) and doesn’t return a server error, then let bots re-crawl your pages. If robots.txt returns a server error, Google may pause crawling your site.

2. Possible URL Errors

- Not found (404): When Google tries to crawl a page that no longer exists, your server returns a 404 error. A simple fix is to 301-redirect the old URL to the most relevant live page.

- Not followed: Googlebot couldn’t fully follow some URLs on your website. JavaScript, cookies, session IDs, DHTML, or Flash are possible culprits.

- 500 internal server error: These errors often occur when a web server is overloaded and can usually be resolved by contacting your web hosting provider. If you’re running a WordPress site, you’ll also want to check your third-party plugins.
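If you export crawl results (URLs with their HTTP status codes) from Search Console or an SEO crawler, a few lines of code can group them into the error types described above and list the URLs to fix first. A minimal sketch; the URLs and status codes are made up, and the buckets are a simplification of how any particular tool reports errors.

```python
from collections import Counter

def classify(status: int) -> str:
    """Bucket an HTTP status code into a broad crawl-error group."""
    if 200 <= status < 300:
        return "ok"
    if 300 <= status < 400:
        return "redirect"
    if status in (404, 410):
        return "not found"
    if 400 <= status < 500:
        return "other client error"
    if 500 <= status < 600:
        return "server error"
    return "unknown"

# Hypothetical export: (URL, HTTP status code returned to the crawler)
crawl_results = [
    ("https://lovemyhome.com/", 200),
    ("https://lovemyhome.com/old-sale", 404),
    ("https://lovemyhome.com/bedroom", 301),
    ("https://lovemyhome.com/checkout", 500),
]

summary = Counter(classify(status) for _, status in crawl_results)
to_fix = [url for url, status in crawl_results if classify(status) in ("not found", "server error")]
print(summary)
print(to_fix)
```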

How to Check and Fix Your Website’s Indexing Issues

Indexing is Google’s way of gathering and processing the data from the web pages it crawls. It allows search engines to optimize speed and performance when matching users’ queries with relevant information, which is why Google can display pages of results within seconds!

Google uses a tiered indexing system in which the most popular content is stored on faster, more expensive storage. Hence the importance of creating content people actually search for.

Common indexing issues webmasters face and their solutions include:

1. Noindex Errors

You can prevent search engines from indexing a page by including a noindex meta tag in its HTML or a noindex X-Robots-Tag header in the HTTP response. Google still has to crawl the page to see the directive; when Googlebot comes across it, it drops the page from Google Search results, regardless of whether other sites link to it.
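A noindex directive can live in the HTML as `<meta name="robots" content="noindex">` or in the HTTP response as an `X-Robots-Tag: noindex` header, so an audit should check both places. A minimal sketch; the sample HTML and headers are hypothetical, and it deliberately ignores crawler-specific variants such as a `googlebot` meta name or a user-agent prefix in the header.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))

def is_noindexed(html: str, headers: dict[str, str]) -> bool:
    """True if either the robots meta tag or the X-Robots-Tag header says noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_value = ""
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":  # HTTP header names are case-insensitive
            header_value = value.lower()
    header_directives = {d.strip() for d in header_value.split(",")}
    return "noindex" in parser.directives or "noindex" in header_directives

print(is_noindexed('<meta name="robots" content="noindex, follow">', {}))
print(is_noindexed("<title>Shop</title>", {"X-Robots-Tag": "noindex"}))
print(is_noindexed("<title>Shop</title>", {}))
```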

There are good reasons to use a noindex tag on a web page or a portion of it, for example:

- To prevent duplicate content.

- To keep admin and login pages for internal use only.

- To keep paid landing pages out of the index. If you’re running a lead capture campaign, you don’t need hundreds of duplicate thank-you pages indexed.

A noindex tag helps separate valuable, curated content from other pages, ensuring the most important pages are available in SERPs. However, a noindex tag applied by mistake causes noindex errors, keeping pages you want indexed out of search results.

If Google discovers or crawls a page but doesn’t index it, the cause is usually one of two things: either the page is judged to be low quality, or there are too many URLs in the crawl queue for the search engine to get through with the budget it has for your site.

Because of the eCommerce boom, it’s common for product pages to run into indexing problems. To combat this, make sure product pages are unique (not copied from external sources), implement canonical tags to consolidate duplicate content, and use noindex tags to keep low-quality sections of your website out of Google’s index.

If your indexing issues are caused by too many URLs, you’ll want to optimize your crawl budget by using your robots.txt file to steer Googlebots toward the most important pages.

The best way to resolve a noindex error is to remove the unwanted noindex directive and resubmit your website’s sitemap through Google Search Console. Noindex errors often go unnoticed until traffic declines, which is why regular monitoring of website performance is paramount.

2. Incorrect Hreflang Tags

Google introduced hreflang tags in 2011 to help it cross-reference pages with similar content aimed at different audiences (usually determined by language or region). They don’t directly increase traffic; instead, they help search engines swap the correct version of a web page into SERPs, serving the right content to the right users.

Below is how hreflang tags are written.

<link rel="alternate" hreflang="de" href="https://example.com/blog/de/article-name/" />

Their syntax breaks down into three parts:

- rel="alternate": Tells the search engine that this is an alternate version of the page.

- hreflang="de": Specifies the language (here, German), optionally followed by a region code.

- href="https://example.com/blog/de/article-name/": The URL of the alternate page.

Given the finicky nature of hreflang tags, most problems are caused by typos, incorrect language or region codes (for example, “en-UK” instead of “en-GB”), and links to non-existent pages.

If the code is incorrect, Google simply ignores the attribute, so you’ll need to audit your hreflang tags for errors with an SEO crawler or audit tool.
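Hreflang annotations must be reciprocal: each language version should list every version, including itself. Generating the tags from one mapping, rather than hand-editing each page, keeps them consistent. A minimal sketch; the URLs and the en-GB version are hypothetical, and `x-default` is the value Google recognizes for a fallback page.

```python
from html import escape

def hreflang_tags(versions: dict[str, str], default_url: str = "") -> list[str]:
    """Return the full set of hreflang <link> tags; every language version should include all of them."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in versions.items()
    ]
    if default_url:
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />')
    return tags

versions = {
    "en": "https://example.com/blog/article-name/",
    "de": "https://example.com/blog/de/article-name/",
    "en-GB": "https://example.com/blog/uk/article-name/",
}
tags = hreflang_tags(versions, default_url="https://example.com/blog/article-name/")
for tag in tags:
    print(tag)
```

Because every page receives the same list, each version automatically links back to all the others, which is the most common thing hand-written hreflang tags get wrong.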

3. Websites That Aren’t Mobile-Friendly

In 2019, Google switched to mobile-first indexing by default for all new websites. Historically, it primarily used the desktop version of a page when evaluating content; however, more than 50% of global search queries now come from mobile devices, so it makes more sense to analyze the mobile version of a website’s content.

Websites that are poorly adapted for mobile tend to rank lower in SERPs. Today, having a mobile-friendly website is one of the fundamentals of technical SEO.

There’s a lot you can do to make your website accessible for mobile users. Optimizing images, search features, and navigation for mobile boosts user experience.

For instance, using drop-down tabs or hamburger navigation menus streamlines the buyer’s journey. CTA buttons should be easily clickable with fingers, and text should be appropriately sized for smaller screens.

On the technical SEO front, businesses can leverage browser caching, minify JavaScript and CSS, compress images to decrease file size, and consider Google’s AMP framework for lightweight mobile pages.

4. Duplicate Content

As we mentioned previously, duplicate content adversely affects search engine optimization. Search engines may not be able to tell which version is the original, and when they choose the duplicate over the original, you lose out on link equity and PageRank.

If your website contains multiple pages with largely identical content, there are several ways to indicate preferred URLs.

- Canonicalization: This is the process of using canonical tags to direct search engines to the right pages for indexing. Essentially, they tell bots which pages you want to appear in SERPs.

- Utilize 301s: A 301 is an HTTP status code that signals a permanent redirect from one URL to another. It passes ranking signals from the old URL to the new one.

- Be consistent: Keep your internal links consistent, and ensure each page has a unique and clean URL.

- Syndicate carefully: Studies estimate that up to 29% of the web is duplicate content. That’s unsurprising, considering how similar product pages are and how common content syndication is. For this reason, make sure sites that syndicate your content include a link back to your original web page.

- Minimize similar content: Consider consolidating similar pages. For instance, if your eCommerce store sells a t-shirt in different colors with essentially the same product information, use one product page with different product images.
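Canonical tags are often generated by normalizing a page’s URL: removing query parameters that create duplicate versions (such as color filters or tracking tags) and pointing every variant at the clean URL. A minimal sketch; which parameters count as “duplicate-making” is site-specific, so `IGNORED_PARAMS` and the URLs below are assumptions.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that change the URL without changing the main content.
IGNORED_PARAMS = {"color", "sort", "utm_source", "utm_medium", "utm_campaign"}

def canonical_url(url: str) -> str:
    """Drop ignored query parameters and the fragment, and lowercase the host name."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query) if key not in IGNORED_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))

def canonical_tag(url: str) -> str:
    """Return the <link rel="canonical"> tag to place in the page's <head>."""
    return f'<link rel="canonical" href="{canonical_url(url)}" />'

print(canonical_tag("https://LoveMyHome.com/t-shirts/classic-tee?color=red&utm_source=newsletter"))
```

Parameters that genuinely change the content, such as `page` for paginated listings, are kept in the canonical URL.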

The best solution for duplicate content is to avoid it altogether and create unique, keyword-rich content for your website and affiliated marketing material.

5. Slow Page Speed

On websites with fewer than 10,000 pages, load times don’t affect crawl rate much; on large eCommerce sites with more than 10,000 pages, however, slow pages can significantly reduce crawling. Even if your website is small, page speed matters because it’s a search engine ranking factor.

Pages that take longer than three seconds to load hurt user experience: visitors grow frustrated and abandon the site. One second can make the difference between making and losing a sale!

Slow page speed potentially means fewer pages crawled by Google, lower rankings and conversions, and higher bounce rates. You can use Google’s PageSpeed Insights to determine what needs to be improved.

Generally, there are four main culprits:

- Large images that are incorrectly sized or uncompressed

- JavaScript that isn’t loaded asynchronously

- Cumbersome plugins or tracking scripts (code that monitors the flow of website traffic)

- Not using a content delivery network (CDN)
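Of these culprits, synchronously loaded JavaScript is the easiest to spot in a page’s HTML: an external `<script src>` without `async` or `defer` blocks HTML parsing while it downloads and runs (module scripts are deferred by default). A minimal audit sketch; the script paths are hypothetical, and it doesn’t cover images, plugins, or CDN use.

```python
from html.parser import HTMLParser

class ScriptAuditor(HTMLParser):
    """Lists external <script src> tags that load synchronously (no async or defer)."""

    def __init__(self) -> None:
        super().__init__()
        self.blocking: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        attributes = dict(attrs)  # valueless attributes like async map to None
        src = attributes.get("src")
        is_module = (attributes.get("type") or "").lower() == "module"
        if src and "async" not in attributes and "defer" not in attributes and not is_module:
            self.blocking.append(src)

HTML = """
<script src="/js/tracking.js"></script>
<script src="/js/app.js" defer></script>
<script src="/js/chat-widget.js" async></script>
<script type="module" src="/js/modern.js"></script>
"""

auditor = ScriptAuditor()
auditor.feed(HTML)
print(auditor.blocking)
```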

Improving page speed can mean several things, from enhancing your website design to resizing images or switching to a mobile-friendly website. The solution may be simple or complex, and it can have a compound effect across your entire site.

Still, regardless of the problem, it’s vital to fix technical SEO issues related to page speed. Sometimes you can do this yourself, but in most cases you’ll need to hire a technical SEO expert, as it may involve various degrees of coding.

How to Check If Your Web Pages Are Indexed

Copy the URL of your web page from the address bar and search for it in Google, ideally with the site: operator (for example, site:example.com/blog/article-name). If your page shows up in the results, it’s been indexed; if nothing comes up, it probably hasn’t been.

Alternatively, you can also use Google’s URL Inspection tool. It inspects a page’s URL and provides insights into discoverability, accessibility, and indexability.

And remember, just because Google has indexed a page doesn’t mean it will rank. To rank, your web pages need high-quality content that satisfies user intent and meets search engines’ technical requirements.

How Technical SEO Improves Rendering

The rendering process allows search engine spiders to see web pages the way you do in your browser as they load, i.e., from a visitor’s perspective. Search engines like Google need to render pages to index them fully.

While external factors come into play, creating a smooth rendering experience is largely the responsibility of the website developer and the technical SEO specialist.

Technical optimization is challenging because not only does your site’s code (the parts users don’t see) need to make it easy for search engines to crawl, render, and index your pages, but your site also has to perform better and faster than your competitors’ sites.

How to Help Google Render Your Website Correctly

1. Don’t Use Too Much JavaScript

A brief explanation of HTML, CSS, and JavaScript:

- HTML: Short for HyperText Markup Language, HTML is the markup language that structures content on the web. It provides the basic structure of a site, which technologies like CSS and JavaScript then style and modify.

- CSS: An acronym for Cascading Style Sheets, CSS is a technology that controls the presentation, formatting, and layout of a website.

- JavaScript: A scripting language used to control the behavior of elements on web pages.

JavaScript-heavy pages take longer to render, which can consume crawl budget and delay crawling and indexing. There are many reasons why JavaScript may prevent your website from rendering correctly.

One of the most common is when JavaScript files are blocked by robots.txt files. This prevents Google and other search engines from fully understanding what your website is about.

From a technical SEO standpoint, you can minify code, compress website assets, and distribute static assets through a CDN that serves them from servers closer to your website’s users. This can reduce page download latency.
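Compressing text assets such as HTML, CSS, and JavaScript is usually handled by the web server or CDN (for example, with gzip or Brotli), but Python’s standard-library `gzip` module shows why it helps: repetitive code compresses very well. The CSS below is an artificial, highly repetitive sample, so real-world savings will be smaller.

```python
import gzip

def compression_report(text: str) -> tuple[int, int]:
    """Return (original size, gzip-compressed size) in bytes for a UTF-8 text asset."""
    raw = text.encode("utf-8")
    compressed = gzip.compress(raw)
    return len(raw), len(compressed)

css = ".product-card { margin: 0 auto; padding: 16px; }\n" * 200
original, compressed = compression_report(css)
print(f"{original} bytes -> {compressed} bytes ({compressed / original:.0%} of original)")
```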

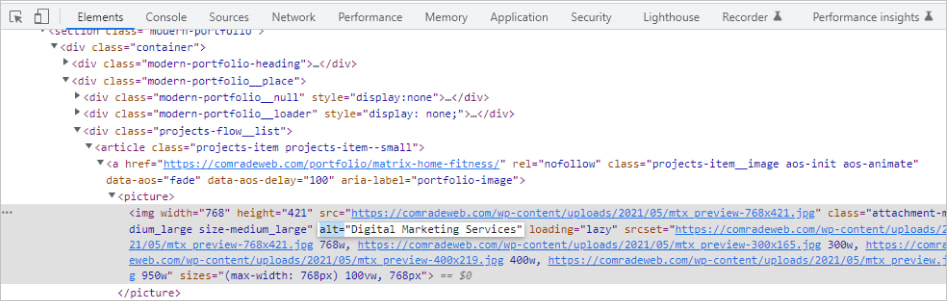

2. Use Alt Attributes

Image optimization is often overlooked in search engine optimization, yet it can improve page load speed and user experience while supporting SERP rankings.

Alt attributes (often called alt tags) are particularly important because browsers display their text when an image can’t be loaded, for example, because of a poor connection, and screen readers read them aloud to visually impaired users.

They textually describe what the image should be showing. Without alt attributes, search engines have far less to go on when working out what your images are meant to display.

There is a technique to writing good alt text. For example, let’s say you have an image of a cat playing with a ball of string.

- Bad description: cat playing

- Good description: black cat playing with a ball of string

Always describe images as objectively as possible. Provide context but don’t use phrases like “image of” or “picture of,” and avoid keyword stuffing.

You can also add alt text to image buttons. Such descriptions might read something like “apply now” or “get a quote.”
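A quick way to find images that need alt text is to scan a page’s HTML for `<img>` tags with a missing or empty `alt` attribute. A minimal sketch with hypothetical image paths; an empty `alt=""` is correct for purely decorative images, so treat flagged empty values as items to review rather than automatic errors.

```python
from html.parser import HTMLParser

class AltTextAuditor(HTMLParser):
    """Flags <img> tags whose alt attribute is missing or empty."""

    def __init__(self) -> None:
        super().__init__()
        self.missing_alt: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt is None or not alt.strip():
            self.missing_alt.append(attributes.get("src") or "(no src)")

HTML = """
<img src="/img/black-cat.jpg" alt="black cat playing with a ball of string">
<img src="/img/banner.jpg">
<img src="/img/divider.png" alt="">
"""

auditor = AltTextAuditor()
auditor.feed(HTML)
print(auditor.missing_alt)
```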

Some SEO-friendly advice: Avoid using images in place of words. Search engines can’t “read” as humans can, so using an image instead of the actual text is confusing for crawlers and can hurt rankings.

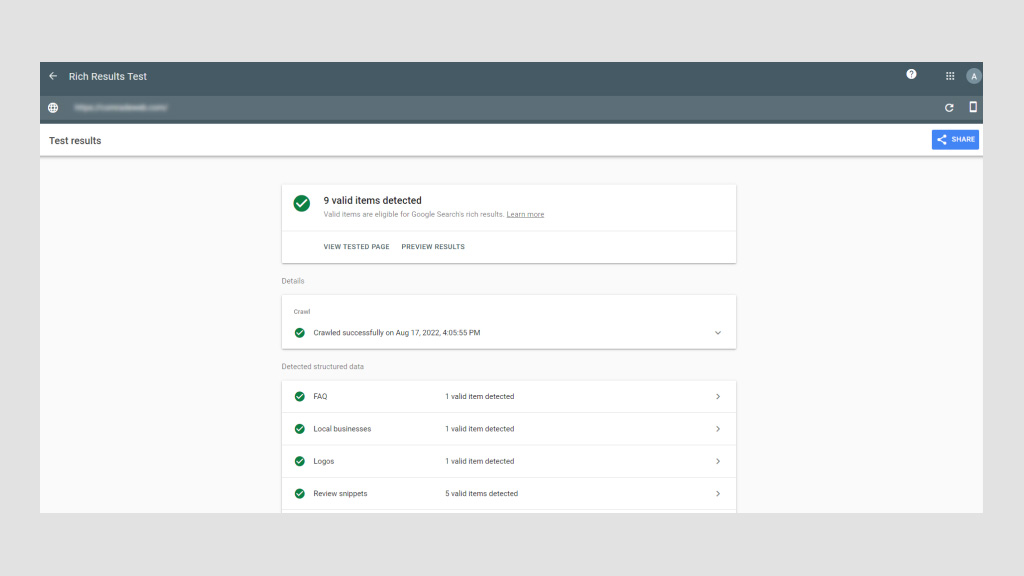

3. Use Schema Data

Structured data is information organized at a code level according to specific rules, guidelines, and vocabularies. It communicates specific page information so search engines can understand and index it correctly.

Structured data is usually implemented as schema markup, which Google recommends adding in JSON-LD format. Once added to a web page, schema markup gives a clearer definition and structure to website content. It’s essentially a way to describe your site to search engines using a specific code vocabulary.

For Google specifically, it makes your content eligible to be displayed as a rich result: a search listing enriched with additional visual or interactive elements that make it stand out.

Rich results stand out from traditional text results, and some types (such as carousels) can appear above them. So, even if your page doesn’t rank first, schema markup can help your listing catch users’ attention.

For example, let’s say you sell books online. With the correct schema markup, you can display the author, a summary, and review stars as a rich result. You can use structured data for all kinds of content, such as movies, reviews, FAQs, and recipes.

Structured data is important for technical SEO because it makes it easier for Google and other search engines to understand what your web pages are about. Moreover, it can increase your click-through rate, which leads to more traffic.
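For the bookstore example, schema markup could describe a book with the schema.org `Book` type, a `Person` author, and an `AggregateRating` for the review stars. A minimal sketch that emits JSON-LD; the title, author, and rating figures are hypothetical, and whether Google actually shows a rich result depends on its structured data guidelines, so validate real markup with the Rich Results Test.

```python
import json

def book_jsonld(title: str, author: str, summary: str, rating: float, review_count: int) -> str:
    """Build schema.org Book markup with an aggregate star rating, as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Book",
        "name": title,
        "author": {"@type": "Person", "name": author},
        "description": summary,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    return json.dumps(data, indent=2)

markup = book_jsonld("The Example Novel", "Jane Doe", "A short, hypothetical summary.", 4.6, 128)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```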

4. Avoid HTML Code Mistakes

Too many HTML errors can prevent search crawlers from parsing (analyzing) web content correctly.

- Using Flash or JavaScript menus without HTML: Your menu should include plain HTML links so users and crawlers that can’t process Flash or JavaScript can still navigate your pages. Links a crawler can’t “read” pass no value, so HTML menus make your web pages more compatible. (Flash itself is no longer supported by modern browsers.)

- Using URLs with “unsafe” characters: Characters such as “&” and “%” have reserved meanings in URLs (“&” separates query parameters, and “%” introduces percent-encoding), so using them elsewhere in a URL can cause search engines to misinterpret it or break it entirely.

- Weak Title Tags and Meta Descriptions: Title tags should contain the web page’s target keyword, whereas meta descriptions should succinctly explain what the page is about. Both are critical for SEO: the former helps rankings, while the latter encourages clicks. Google truncates long titles and descriptions in search results, so a common guideline is to keep title tags to roughly 50 to 60 characters and meta descriptions to about 155 to 160 characters.

HTML is rather technical, but luckily, there are technical SEO tools to help you implement the correct tags without knowing how to code like a pro. The Yoast SEO plugin for WordPress and Moz’s tools are industry standards that professionals use to get these tags right.
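A simple pre-publishing check can flag missing or overly long title tags and meta descriptions. A minimal sketch; the 60- and 160-character limits are assumed rules of thumb, since Google truncates snippets by pixel width rather than a fixed character count.

```python
# Assumed guideline limits; Google truncates by pixel width, not a fixed character count.
TITLE_MAX = 60
DESCRIPTION_MAX = 160

def check_snippet(title: str, description: str) -> list[str]:
    """Return a list of problems with a page's title tag and meta description."""
    problems = []
    if not title.strip():
        problems.append("missing title")
    elif len(title) > TITLE_MAX:
        problems.append(f"title is {len(title)} characters (over {TITLE_MAX})")
    if not description.strip():
        problems.append("missing meta description")
    elif len(description) > DESCRIPTION_MAX:
        problems.append(f"description is {len(description)} characters (over {DESCRIPTION_MAX})")
    return problems

print(check_snippet("Oak Side Tables | Love My Home", "Shop solid oak side tables for the bedroom."))
```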

Technical SEO Checklist: How to Improve Technical SEO

If you’re serious about improving technical SEO, you need to conduct a technical SEO audit. Think of it as a health check-up for your website.

Being negligent about technical SEO can result in plummeting traffic and revenue.

We recommend performing a mini-site audit every month and a full-scale technical audit every four to five months.

Step 01: Audit Your Site Architecture

Googlebots should be able to crawl and index all the website pages you want in search results, including your breadcrumbs and XML sitemap. Regardless of the type of navigation your website has, it must have a clean URL structure.

For instance, you want a logical parent-child page structure. Let’s say you run a home decor store. A parent URL might be https://lovemyhome.com/bedroom, containing pages with bedroom furniture and decor, so a child URL might look like this: https://lovemyhome.com/bedroom/side-tables.

You also want to avoid orphan pages: pages that no other page or section on your site links to. Orphan pages signal to search engines that they’re unimportant, and crawlers may struggle to find them at all.

Then, when it comes to primary navigation, remember to include your service- and solution-focused pages, and keep your navigation to no more than 30 links to preserve link equity.

There are two components to auditing site architecture. The first has to do with technical factors like fixing broken links, and the second has to do with user experience. Google’s tools make it relatively easy to handle the first.
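Clean parent-child URLs are usually built from page names turned into “slugs”: lowercase words joined by hyphens, with accents and unsafe characters such as “&” or “%” stripped out. A minimal sketch; `slugify` and `child_url` are hypothetical helpers, and most CMSs have their own built-in equivalent.

```python
import re
import unicodedata

def slugify(text: str) -> str:
    """Lowercase ASCII words joined by hyphens, with accents and unsafe characters removed."""
    # NFKD splits accented letters (é -> e + accent) so the accent can be dropped as non-ASCII
    normalized = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    words = re.findall(r"[a-z0-9]+", normalized.lower())
    return "-".join(words)

def child_url(base: str, *segments: str) -> str:
    """Join a base URL and page names into a parent/child URL path."""
    return base.rstrip("/") + "/" + "/".join(slugify(segment) for segment in segments)

print(child_url("https://lovemyhome.com", "Bedroom", "Side Tables"))
print(slugify("Tables & Chairs: 50% Off!"))
```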
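One way to spot orphan pages is to compare the full list of URLs you know about (for example, from your sitemap or CMS) with the set of URLs that internal links actually point to. A minimal sketch with a hypothetical page list and link map; a page that only links to itself still counts as an orphan, and the homepage is excluded because it’s the entry point.

```python
def find_orphans(all_pages: set[str], links: dict[str, list[str]], home: str = "/") -> set[str]:
    """Pages that no other page links to (the homepage is excluded as the entry point)."""
    linked_from_elsewhere = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # ignore self-links
    }
    return all_pages - linked_from_elsewhere - {home}

all_pages = {"/", "/bedroom", "/bedroom/side-tables", "/summer-sale-2021"}
links = {
    "/": ["/bedroom"],
    "/bedroom": ["/bedroom/side-tables", "/bedroom"],
    "/summer-sale-2021": ["/summer-sale-2021"],
}
print(find_orphans(all_pages, links))
```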

However, when it comes to UX, human feedback helps. If you’re struggling to view your website with fresh eyes, ask family and friends to review it. The less they know about UX, the better, as they more than likely represent everyday users.

Ask yourself (and them):

- Is my website clear?

- Does my main navigation bar link to the most important pages?

- Is it easy for visitors to contact me?

- Can a visitor understand what my business is about and how I can help them just by visiting my website?

Step 02: Check for Duplicate Content

Using top-tier software like Semrush, Moz, or Screaming Frog is the best way to check for duplicate content. These SEO tools flag both internal and external duplicate content for specific web pages.

If you’re tempted to copy and paste sentences from your web copy into Google Search to see if other URLs pop up, don’t. This isn’t accurate and unnecessarily wastes precious time when there are far more sophisticated options available.

As we mentioned earlier, an estimated 29% of the web is duplicate content. So, as always, the more original your content, the better: aim to keep your own share of duplicate content well below that figure.

Lastly, you’ll want to eliminate boilerplate content: the same text repeated across many pages, such as terms and conditions. In this instance, it’s better to create a single T&Cs page and link to it from the other pages.
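SEO tools use their own proprietary methods, but a common textbook technique for spotting near-duplicate pages is to compare overlapping word sequences (“shingles”) with Jaccard similarity: the number of shared shingles divided by the number of distinct shingles across both pages. A minimal sketch; the three-word shingle size and the sample product descriptions are assumptions.

```python
def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """All runs of `size` consecutive lowercase words in the text."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Shared shingles / all distinct shingles; 1.0 means identical wording."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not (sa | sb):
        return 0.0  # texts shorter than the shingle size can't be compared this way
    return len(sa & sb) / len(sa | sb)

red_tee = "this classic cotton t-shirt is soft breathable and machine washable in red"
blue_tee = "this classic cotton t-shirt is soft breathable and machine washable in blue"
print(f"{jaccard_similarity(red_tee, blue_tee):.2f}")
```

Here the two descriptions differ by a single word, so they share 9 of 11 distinct shingles (about 0.82), a strong hint that the pages should be consolidated.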

Step 03: Check Site Speed and Mobile Optimization With Google

You can use Google’s PageSpeed Insights to ensure your website is fast on all devices. Google’s software scores your website speed out of 100. What’s important to remember is that page speed is somewhat relative in the sense that people using a mobile on a 3G connection will experience “slowness” for every website they’re using.

Additionally, there is a trade-off between speed and user experience: some page elements that improve the experience will also slow the page down. Of course, you should aim for the fastest speed possible, as long as you remember (which we’re sure you will after reading this article) that many factors affect search engine optimization (SEO).

In terms of site speed analysis, it’s handy to analyze your competitors’ site speeds, so you know what you’re up against and can benchmark your results.
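PageSpeed Insights also has an API, which is handy if you want to benchmark several sites at once. The sketch below assumes the public v5 `runPagespeed` endpoint, which returns the Lighthouse performance score as a number between 0 and 1 under `lighthouseResult.categories.performance.score`. The helper names and example URLs are hypothetical. Heavy use may require an API key, so check Google’s current API reference before relying on it.

```python
import json
import urllib.parse
import urllib.request

# Assumed public endpoint for the PageSpeed Insights API v5.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"

def build_psi_url(page_url: str, strategy: str = "mobile") -> str:
    """Build the request URL; strategy is "mobile" or "desktop"."""
    query = urllib.parse.urlencode({"url": page_url, "strategy": strategy})
    return f"{PSI_ENDPOINT}?{query}"

def performance_score(psi_response: dict) -> int:
    """Convert Lighthouse's 0.0-1.0 performance score to the 0-100 scale shown in the UI."""
    score = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)

def fetch_score(page_url: str, strategy: str = "mobile") -> int:
    """Call the live API (needs network access; may need an API key at volume)."""
    with urllib.request.urlopen(build_psi_url(page_url, strategy), timeout=120) as response:
        return performance_score(json.load(response))

def benchmark(scores: dict) -> list:
    """Rank (site, score) pairs from fastest to slowest."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Offline demo with hand-written scores. In practice you would build the dict with
# {site: fetch_score(site) for site in ["https://www.example.com", "https://competitor.example"]}
print(benchmark({"https://www.example.com": 62, "https://competitor.example": 88}))
```

Running both the "mobile" and "desktop" strategies gives you two separate benchmarks, which matters because mobile scores are usually the harder ones to improve.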

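As for mobile optimization, Google used to offer a standalone Mobile-Friendly Test that live-tested your URL and pointed you to Google Search Console for in-depth analysis. Google retired that tool in December 2023. Use the mobile results in PageSpeed Insights, or Lighthouse in Chrome DevTools, to check how your pages perform on phones.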

Step 04: Collect and Redirect “Useless Pages”

None of your web pages should be useless. If they are, you need to get rid of them, especially if they’re placeholder pages. These look unprofessional and are bad for SEO. It’s honestly better to just launch your website when it’s ready.

Any web pages “under construction” with zero content won’t rank highly. What’s more, if they do rank for keywords people are searching for but don’t offer any value, your business immediately loses credibility. They serve no navigational purpose and are likely to be ignored by Googlebot.

However, some web pages are useful for visitors but shouldn’t appear in Google’s search results:

- Landing pages for ads

- Thank-you pages

- Privacy and policy pages

- Admin pages

- Duplicate pages

- Low-value pages (e.g., outdated content that visitors may still find useful)

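To keep these pages out of Google’s index, add a noindex robots meta tag (or the equivalent X-Robots-Tag HTTP header). A robots.txt file is not a substitute for this, for three reasons:

- It only stops Google from crawling a URL.
- A blocked page can still be indexed if other pages link to it.
- Google can’t see a noindex tag on a page it isn’t allowed to crawl.

It can take time for Google to act on these changes. Pages that still appear in results despite noindex directives may not have been crawled since you added them. You can ask Google to recrawl these pages with the URL Inspection tool in Google Search Console, which replaced the old Fetch as Google feature.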
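A noindex tag only works if Google can crawl the page and see it. Blocking the same URL in robots.txt therefore defeats the tag. The hedged Python sketch below checks a page’s HTML for a robots noindex meta tag and tests its URL against robots.txt rules using the standard library’s `urllib.robotparser`. The `check_page` helper, the sample rules, and the URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> (or name="googlebot") tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())

def has_noindex(html: str) -> bool:
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives

def check_page(url: str, html: str, robots_txt: str, user_agent: str = "Googlebot") -> str:
    """Classify a page by combining its robots.txt status with its meta robots tag."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    crawlable = rules.can_fetch(user_agent, url)
    noindex = has_noindex(html)
    if noindex and not crawlable:
        return "conflict: robots.txt blocks crawling, so Google never sees the noindex tag"
    if noindex:
        return "ok: crawlable, and noindex will drop it from the index"
    if not crawlable:
        return "warning: blocked from crawling, but the URL can still be indexed via links"
    return "indexable"

robots_txt = "User-agent: *\nDisallow: /thank-you\n"
page_html = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(check_page("https://www.example.com/thank-you", page_html, robots_txt))
print(check_page("https://www.example.com/privacy", page_html, robots_txt))
```

The fix for a “conflict” result is to remove the robots.txt rule for that URL and let the noindex tag do its job.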

Step 05: Check Structured Data

The most practical way to conduct a structured data audit is with software tools like Google Search Console, Screaming Frog, and Sitebulb. It’s possible to do it manually, but we don’t recommend this unless you’re a technical SEO and/or have loads of time on your hands.

Google Search Console will tell you which pages have errors and identify where and/or how each error occurs. But like any audit, structured data audits are about both fixing errors and discovering SEO opportunities.

When conducting a structured data audit, you’ll want to determine whether any markup is broken and whether there’s more you can do. Take an eCommerce store that has implemented product review markup where some products have only a few, or poor, ratings. It might decide to remove the markup from those products, avoiding reputational damage until it has improved them.
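Product review markup is usually added as JSON-LD. The Python sketch below builds a schema.org `Product` object and only adds `aggregateRating` when a product passes review thresholds, mirroring the eCommerce example above. The `MIN_REVIEWS` and `MIN_AVERAGE_RATING` cut-offs and the product data are hypothetical. Run your output through Google’s Rich Results Test before publishing.

```python
import json

# Hypothetical thresholds: tune these to your own catalogue and review volume.
MIN_REVIEWS = 5
MIN_AVERAGE_RATING = 3.5

def product_jsonld(name: str, description: str, review_count: int, average_rating: float) -> dict:
    """Build schema.org Product markup, adding review data only when it passes the thresholds."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
    }
    if review_count >= MIN_REVIEWS and average_rating >= MIN_AVERAGE_RATING:
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": average_rating,
            "reviewCount": review_count,
        }
    return data

def as_script_tag(data: dict) -> str:
    """Wrap the markup in the <script> tag that goes in the page's HTML."""
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"

# The first product gets rating markup; the second (2 reviews, 2.5 stars) does not.
print(as_script_tag(product_jsonld("Trail Running Shoe", "Lightweight shoe for rough terrain.", 42, 4.6)))
print(as_script_tag(product_jsonld("Rain Jacket", "Packable waterproof jacket.", 2, 2.5)))
```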

Top FREE Tools to Check Your Technical SEO

Every technical SEO specialist worth their salt knows how to conduct an SEO audit manually. However, it’s tedious and, given the tools on the market, unnecessary. Below are the tools we’d use to perform a high-quality technical SEO audit.

Google Search Console

This is hands down the best free site audit tool. Google Search Console shows your website the way Google’s own bots see it, and that feature alone makes it indispensable. With it, you can monitor indexing and crawling, identify and fix errors, request indexing of new or updated pages, and review your internal and external links.

Google PageSpeed Insights

This tool lets you test web page speed, and it’s very simple to use: enter the page’s URL and hit “Analyze.” PageSpeed Insights’ detailed reports include a performance score out of 100 and a prioritized list of suggestions for making the page load faster.

Google’s Structured Data Markup Helper

Compatible with the majority of search engines, Google’s Structured Data Markup Helper helps you mark up different types of structured data on your website. The tool identifies missing fields that need to be filled in to complete your markup. It supports a wide range of markup types and can help your pages become eligible for rich snippets.

Ahrefs

The main caveat with Ahrefs is that you won’t get full functionality without a paid subscription. You also have to connect your website to it, unlike Google PageSpeed Insights, where you just paste in a URL. Otherwise, it’s useful for fixing technical errors, submitting sitemaps, and spotting structured data issues.

Ubersuggest

While Ubersuggest is mainly used for keyword research, it can perform full-scale audits to check your website for critical errors, warnings, and recommendations. What’s great about Ubersuggest is that it pinpoints areas that need urgent attention and prioritizes them accordingly.

Hire the Best SEO Professionals

Technical SEO and audits are mandatory for new and established websites. It’s normal to encounter technical SEO issues, most of which are easy to fix. However, you still need the technical know-how. This is where working with an SEO professional can save you time and help avoid costly delays.

Is your technical SEO up to date? If not, we’ll conduct a free SEO audit to identify underlying issues and offer recommendations. As a full-service digital marketing agency, we’ve improved our clients’ traffic by 175% on average! Call us today to fix your technical SEO issues.